Please understand I know what I'm doing and I know this works!!

I'm having issues using Rayon to parallelize some simple inner loops.

Here's the original code:

fn sozpg(val: usize, res_0: usize, start_num : usize, end_num : usize) -> Vec<usize> {

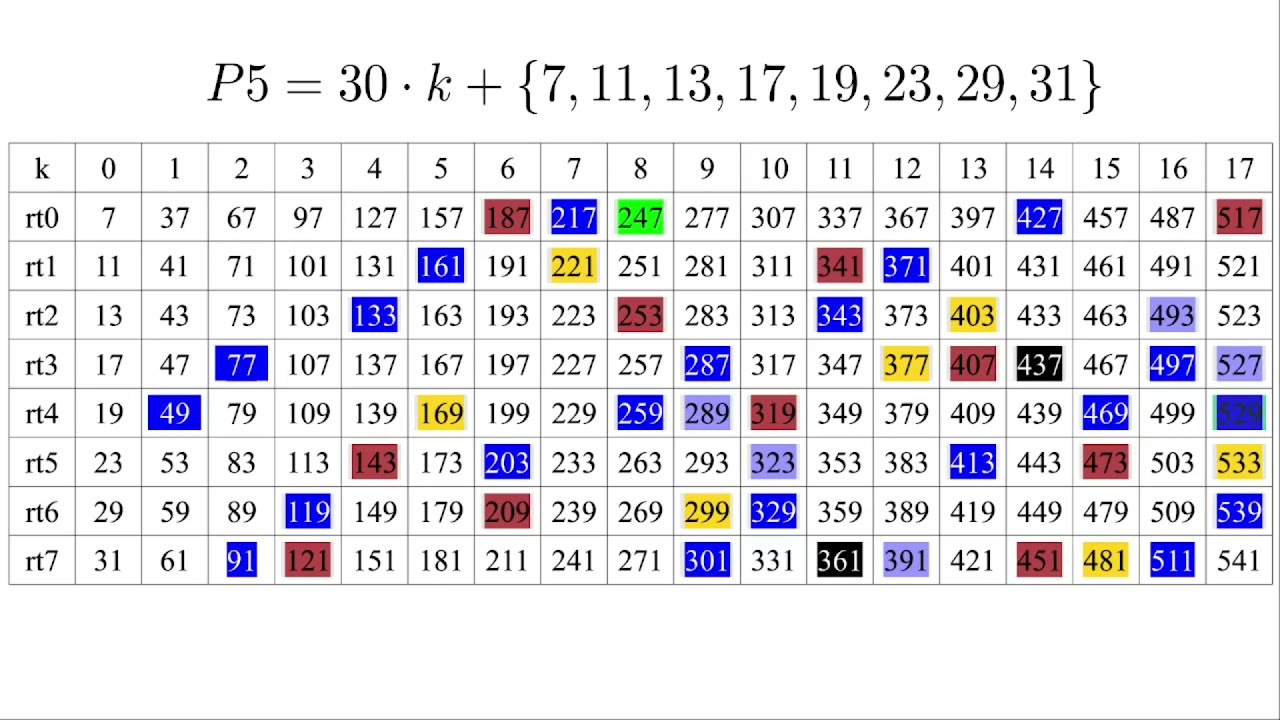

let (md, rscnt) = (30, 8);

static RES: [usize; 8] = [7,11,13,17,19,23,29,31];

static BITN: [u8; 30] = [0,0,0,0,0,1,0,0,0,2,0,4,0,0,0,8,0,16,0,0,0,32,0,0,0,0,0,64,0,128];

let kmax = (val - 2) / md + 1;

let mut prms = vec![0u8; kmax];

let sqrt_n = val.integer_sqrt();

let (mut modk, mut r, mut k) = (0, 0, 0 );

loop {

if r == rscnt { r = 0; modk += md; k += 1 }

if (prms[k] & (1 << r)) != 0 { r += 1; continue }

let prm_r = RES[r];

let prime = modk + prm_r;

if prime > sqrt_n { break }

for ri in &RES {

let prod = prm_r * ri - 2;

let bit_r = BITN[prod % md];

let mut kpm = k * (prime + ri) + prod / md;

while kpm < kmax { prms[kpm] |= bit_r; kpm += prime };

}

r += 1;

}

let mut primes = vec![];

for (k, resgroup) in prms.iter().enumerate() {

for (i, r_i) in RES.iter().enumerate() {

if resgroup & (1 << i) == 0 {

let prime = md * k + r_i;

let (n, rem) = (start_num / prime, start_num % prime);

if (prime >= res_0 && prime <= val) && (prime * (n + 1) <= end_num || rem == 0) { primes.push(prime); }

} } }

primes

}

I want to use Rayon (I'm already using it in other places) to run this loop in parallel:

for ri in &RES {

let prod = prm_r * ri - 2;

let bit_r = BITN[prod % md];

let mut kpm = k * (prime + ri) + prod / md;

while kpm < kmax { prms[kpm] |= bit_r; kpm += prime };

}

Here's how I did it with Crystal, which works as intended.

def sieve(ri, md, k, prime, prm_r, kmax, bitn, prms)

kn, rn = (prm_r * ri - 2).divmod md

kpm = k * (prime + ri) + kn

bit_r = bitn[rn]

while kpm < kmax; prms[kpm] |= bit_r; kpm += prime end

end

def sozpg

...

loop do

if (r += 1) == rscnt; r = 0; modk += md; k += 1 end

next if prms[k] & (1 << r) != 0

prm_r = res[r]

prime = modk + prm_r

break if prime > Math.isqrt(val)

done = Channel(Nil).new(rscnt)

res.each do |ri|

spawn do sieve(ri, md, k, prime, prm_r, kmax, bitn, prms); done.send(nil) end

end

rscnt.times { done.receive }

end

After many attempts, I got it down to a single error:

RES.par_iter().for_each ( |ri| {

let prod = prm_r * ri - 2;

let bit_r = BITN[prod % md];

let mut kpm = k * (prime + ri) + prod / md;

while kpm < kmax { *&prms[kpm] |= bit_r; kpm += prime; };

});

error[E0594]: cannot assign to data in a `&` reference

--> src/main.rs:177:26

|

177 | while kpm < kmax { *&prms[kpm] |= bit_r; kpm += prime; };

| ^^^^^^^^^^^^^^^^^^^^ cannot assign

For more information about this error, try `rustc --explain E0594`.

error: could not compile `twinprimes_ssoz` due to previous error

The compiler has been excellent at getting me down to just one error, but now

I'm going in circles trying to get it to let prms be shared and written into from inside the closure.

HELP!
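
For reference, here is a rough sketch of one way past E0594. `*&prms[kpm]` only reborrows `prms` as a shared reference, and the closure given to `for_each` may run on several threads at once, so it cannot hold `&mut prms`. Different residues can also set different bits of the same byte, so the update itself needs to be atomic. The sketch assumes `prms` is changed to a `Vec<AtomicU8>` and uses `fetch_or`. To compile without extra crates it spawns one standard-library scoped thread per residue instead of using Rayon, and `mark_multiples` is a hypothetical helper name, not part of the code above.

```rust
use std::sync::atomic::{AtomicU8, Ordering};
use std::thread;

const MD: usize = 30;
static RES: [usize; 8] = [7, 11, 13, 17, 19, 23, 29, 31];
static BITN: [u8; 30] = [
    0, 0, 0, 0, 0, 1, 0, 0, 0, 2, 0, 4, 0, 0, 0, 8, 0, 16, 0, 0, 0, 32, 0, 0, 0, 0, 0, 64, 0, 128,
];

/// Hypothetical helper: marks the multiples of prime = MD * k + prm_r in `prms`,
/// running the inner loop for each residue on its own scoped thread.
fn mark_multiples(prms: &[AtomicU8], k: usize, prm_r: usize) {
    let kmax = prms.len();
    let prime = MD * k + prm_r;
    thread::scope(|s| {
        for &ri in &RES {
            // Everything captured here is Copy, including the shared slice reference.
            s.spawn(move || {
                let prod = prm_r * ri - 2;
                let bit_r = BITN[prod % MD];
                let mut kpm = k * (prime + ri) + prod / MD;
                while kpm < kmax {
                    // Atomic read-modify-write: concurrent ORs into the same byte are not lost.
                    prms[kpm].fetch_or(bit_r, Ordering::Relaxed);
                    kpm += prime;
                }
            });
        }
        // All threads are joined when the scope ends, so the marks are visible afterwards.
    });
}
```

Since `&[AtomicU8]` is `Sync`, the same closure body should also be accepted by `RES.par_iter().for_each(...)`. The check in the outer loop would then read the byte with `prms[k].load(Ordering::Relaxed)`.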

I'd also like to run this loop in parallel:

for (k, resgroup) in prms.iter().enumerate() {

for (i, r_i) in RES.iter().enumerate() {

if resgroup & (1 << i) == 0 {

let prime = md * k + r_i;

let (n, rem) = (start_num / prime, start_num % prime);

if (prime >= res_0 && prime <= val) && (prime * (n + 1) <= end_num || rem == 0) { primes.push(prime); }

} } }
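
For this second loop, `primes.push(prime)` has the same problem: it needs `&mut primes`, which parallel workers cannot share. A possible approach, sketched below under assumptions, is to let each worker fill its own local `Vec` and concatenate the pieces in order afterwards. The sketch uses standard-library scoped threads over chunks of a plain `&[u8]` so that it compiles without extra crates. `collect_primes` and `chunk_len` are hypothetical names introduced for illustration, and `chunk_len` must be greater than zero.

```rust
use std::thread;

const MD: usize = 30;
static RES: [usize; 8] = [7, 11, 13, 17, 19, 23, 29, 31];

/// Hypothetical helper: extracts the unmarked candidates from `prms`,
/// one scoped thread per chunk of `chunk_len` residue groups.
fn collect_primes(
    prms: &[u8],
    res_0: usize,
    val: usize,
    start_num: usize,
    end_num: usize,
    chunk_len: usize,
) -> Vec<usize> {
    thread::scope(|s| {
        let handles: Vec<_> = prms
            .chunks(chunk_len)
            .enumerate()
            .map(|(c, chunk)| {
                s.spawn(move || {
                    let mut local = Vec::new(); // private to this thread
                    for (j, resgroup) in chunk.iter().enumerate() {
                        let k = c * chunk_len + j; // index of the group in the full slice
                        for (i, r_i) in RES.iter().enumerate() {
                            if *resgroup & (1u8 << i) == 0 {
                                let prime = MD * k + r_i;
                                let (n, rem) = (start_num / prime, start_num % prime);
                                if (prime >= res_0 && prime <= val)
                                    && (prime * (n + 1) <= end_num || rem == 0)
                                {
                                    local.push(prime);
                                }
                            }
                        }
                    }
                    local
                })
            })
            .collect();
        // Joining in spawn order keeps the primes sorted, as in the serial loop.
        handles.into_iter().flat_map(|h| h.join().unwrap()).collect()
    })
}
```

With Rayon, the same idea should be expressible with `flat_map_iter` followed by `collect()` into a `Vec`, with no explicit thread handling.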