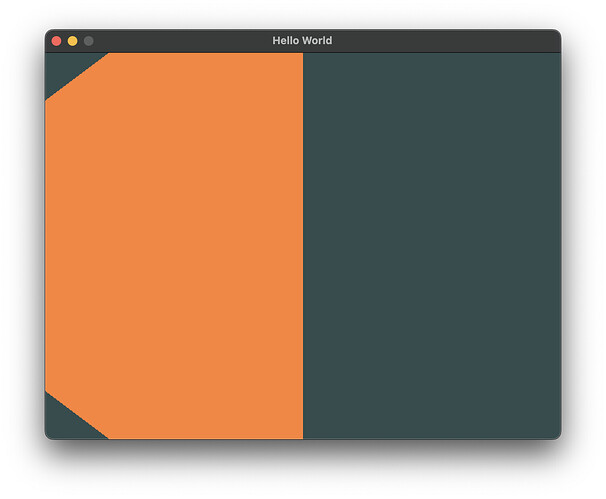

Simple program to draw a triangle. The problem is that the axes appear rotated by 90 degrees clockwise and zoomed in.

This turns out to be a type problem: the vertices literals are inferred as f64, while OpenGL reads the buffer as GLfloat, which is typed as a C float (f32). Anyone know why this happens?
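To see why the picture changes instead of just breaking, here is a small sketch that mimics what the driver does when f64 bytes are read as 32-bit floats. It does not use the gl crate. The helper name reinterpret_as_f32 is made up for illustration, and little-endian byte order is assumed.

```rust
use std::mem::size_of_val;

/// Split each f64 into the two f32 values that occupy the same 8 bytes.
/// Little-endian byte order is assumed (as on x86-64 and most ARM targets).
fn reinterpret_as_f32(data: &[f64]) -> Vec<f32> {
    data.iter()
        .flat_map(|v| {
            let b = v.to_le_bytes();
            [
                f32::from_le_bytes([b[0], b[1], b[2], b[3]]),
                f32::from_le_bytes([b[4], b[5], b[6], b[7]]),
            ]
        })
        .collect()
}

fn main() {
    // Nothing constrains these literals, so the compiler falls back to f64.
    let unannotated = [-0.5, -0.5, 0.0];
    println!("{} bytes per vertex", size_of_val(&unannotated)); // 24, not 12

    // Same data as in the post, with the inferred type spelled out.
    let vertices: [[f64; 3]; 3] = [
        [-0.5, -0.5, 0.0],
        [0.5, -0.5, 0.0],
        [0.0, 0.5, 0.0],
    ];
    let flat: Vec<f64> = vertices.iter().flatten().copied().collect();

    // The post uploads 3 * 3 * 4 = 36 bytes, so only the first 9 f32 values
    // ever reach the vertex shader.
    let seen: Vec<f32> = reinterpret_as_f32(&flat).into_iter().take(9).collect();
    for vertex in seen.chunks(3) {
        println!("{:?}", vertex);
    }
}
```

Under those assumptions the three vertices the shader receives are (0, -1.75, 0), (-1.75, 0, 0) and (0, 1.75, 0): a larger triangle turned on its side, which fits the rotated and zoomed-in picture.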

use gl::types::*;

use std::time::Duration;

use sdl2::event::Event;

use sdl2::keyboard::Keycode;

const VERTEX_SHADER: &str = r#"#version 330

layout (location = 0) in vec3 pos;

void main() {

gl_Position = vec4(pos.x, pos.y, pos.z, 1.0);

}"#;

const FRAGMENT_SHADER: &str = r#"#version 330

precision highp float;

out vec4 color;

void main(void) {

color = vec4(1.0, 0.5, 0.2, 1.0);

}"#;

fn main() {

let sdl_context = sdl2::init().unwrap();

let video_subsystem = sdl_context.video().unwrap();

let window = video_subsystem.window("Hello World", 640, 480)

.position_centered()

.opengl()

.build()

.unwrap();

let gl_attr = video_subsystem.gl_attr();

gl_attr.set_context_profile(sdl2::video::GLProfile::Core);

gl_attr.set_context_version(3, 3);

let gl_context = window.gl_create_context().unwrap();

let gl = gl::load_with(|s| video_subsystem.gl_get_proc_address(s) as *const std::os::raw::c_void);

let (width, height) = window.size();

// NOTE: with no type annotation these literals are inferred as f64, not GLfloat (f32)

let vertices = [

[-0.5, -0.5, 0.0],

[ 0.5, -0.5, 0.0],

[ 0.0, 0.5, 0.0]

];

// set viewport size

unsafe {

gl::Viewport(0, 0, width as i32, height as i32);

}

// load program

let program: GLuint;

unsafe {

let vertex_shader: GLuint;

let fragment_shader: GLuint;

// vertex shader

vertex_shader = gl::CreateShader(gl::VERTEX_SHADER);

gl::ShaderSource(

vertex_shader,

1,

&VERTEX_SHADER.as_bytes().as_ptr().cast(),

&VERTEX_SHADER.len().try_into().unwrap(),

);

gl::CompileShader(vertex_shader);

let mut success: GLint = 0;

gl::GetShaderiv(vertex_shader, gl::COMPILE_STATUS, &mut success);

if success == 0 {

let mut buffer: Vec<u8> = Vec::with_capacity(1024);

let mut log_len: GLint = 0;

gl::GetShaderInfoLog(

vertex_shader,

1024,

&mut log_len,

buffer.as_mut_ptr().cast(),

);

buffer.set_len(log_len as usize);

let message = String::from_utf8_lossy(&buffer);

panic!("Vertex Shader (len: {}) {}", log_len, message.trim());

}

// fragment shader

fragment_shader = gl::CreateShader(gl::FRAGMENT_SHADER);

gl::ShaderSource(

fragment_shader,

1,

&FRAGMENT_SHADER.as_bytes().as_ptr().cast(),

&FRAGMENT_SHADER.len().try_into().unwrap(),

);

gl::CompileShader(fragment_shader);

let mut success: GLint = 0;

gl::GetShaderiv(fragment_shader, gl::COMPILE_STATUS, &mut success);

if success == 0 {

let mut buffer: Vec<u8> = Vec::with_capacity(1024);

let mut log_len: GLint = 0;

gl::GetShaderInfoLog(

fragment_shader,

1024,

&mut log_len,

buffer.as_mut_ptr().cast(),

);

buffer.set_len(log_len as usize);

let message = String::from_utf8_lossy(&buffer);

panic!("Fragment Shader (len: {}) {}", log_len, message.trim());

}

program = gl::CreateProgram();

gl::AttachShader(program, vertex_shader);

gl::AttachShader(program, fragment_shader);

gl::LinkProgram(program);

let mut success: GLint = 0;

gl::GetProgramiv(program, gl::LINK_STATUS, &mut success);

if success == 0 {

let mut buffer: Vec<u8> = Vec::with_capacity(1024);

let mut log_len: GLint = 0;

gl::GetProgramInfoLog(

program,

1024,

&mut log_len,

buffer.as_mut_ptr().cast(),

);

buffer.set_len(log_len as usize);

let message = String::from_utf8_lossy(&buffer);

panic!("Program (len: {}) {}", log_len, message.trim());

}

}

// create buffers

let mut vao: GLuint = 0;

let mut vbo: GLuint = 0;

unsafe {

gl::GenBuffers(1, &mut vbo);

gl::GenVertexArrays(1, &mut vao);

gl::BindVertexArray(vao);

gl::BindBuffer(gl::ARRAY_BUFFER, vbo);

gl::BufferData(

gl::ARRAY_BUFFER,

(vertices.len() * 3 * std::mem::size_of::<GLfloat>()) as GLsizeiptr,

vertices.as_ptr().cast(),

gl::STATIC_DRAW,

);

gl::VertexAttribPointer(

0,

3,

gl::FLOAT,

gl::FALSE,

(3 * std::mem::size_of::<GLfloat>()) as GLint,

std::ptr::null()

);

gl::EnableVertexAttribArray(0);

gl::BindBuffer(gl::ARRAY_BUFFER, 0);

gl::BindVertexArray(0);

}

unsafe {

gl::ClearColor(0.2, 0.3, 0.3, 1.0);

gl::Clear(gl::COLOR_BUFFER_BIT);

gl::UseProgram(program);

// gl::UniformMatrix4fv(uniform_projection, 1, gl::FALSE, trans_matrix.to_cols_array().as_ptr() );

gl::BindVertexArray(vao);

gl::DrawArrays(gl::TRIANGLES, 0, vertices.len() as GLint);

window.gl_swap_window();

}

// event loop

let mut event_pump = sdl_context.event_pump().unwrap();

'main_loop: loop {

for event in event_pump.poll_iter() {

match event {

Event::Quit {..} |

Event::KeyDown { keycode: Some(Keycode::Escape), .. } => {

break 'main_loop

},

_ => {}

}

}

// render frame

std::thread::sleep(Duration::new(0, 1_000_000_000u32 / 60));

}

unsafe {

gl::DeleteVertexArrays(1, &vao);

gl::DeleteBuffers(1, &vbo);

gl::DeleteProgram(program)

}

}
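One possible way to avoid the mismatch, sketched without the gl crate: annotate the vertex array with the element type OpenGL expects and derive the byte length from the data itself. The GLfloat alias below is a stand-in for gl::types::GLfloat, and byte_len is a hypothetical helper, not something from the original program.

```rust
use std::mem::size_of_val;

// Stand-in for gl::types::GLfloat so the sketch compiles without the gl crate.
type GLfloat = f32;

/// Byte length of the vertex data, taken from the slice itself instead of a
/// hand-written `len * 3 * size_of::<GLfloat>()` product.
fn byte_len(vertices: &[[GLfloat; 3]]) -> usize {
    size_of_val(vertices)
}

fn main() {
    // The explicit annotation makes every literal an f32.
    let vertices: [[GLfloat; 3]; 3] = [
        [-0.5, -0.5, 0.0],
        [0.5, -0.5, 0.0],
        [0.0, 0.5, 0.0],
    ];

    // This is the value that would be cast to GLsizeiptr for gl::BufferData.
    println!("{} bytes", byte_len(&vertices)); // 36
}
```

Because byte_len only accepts GLfloat rows, passing an f64 array would fail to compile instead of silently uploading the wrong bytes.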

Based off of Code Viewer. Source code: src/1.getting_started/2.1.hello_triangle/hello_triangle.cpp