I'm computing a hash of a graphics mesh object to use as a lookup key for combining duplicate objects. The hashing, with SHA3-256, is a major bottleneck: hashing a mesh to get its key takes longer than building the mesh from the input data.

So here's the code:

```rust
use sha3::digest::FixedOutput; // brings finalize_fixed() into scope
use sha3::Digest; // brings new() and update() into scope

pub type KeyHash = sha3::Sha3_256;

// ...

pub fn keytype_from_mesh_coords(mesh_coords: &MeshCoords) -> KeyType {
    let mut hasher = KeyHash::new();
    // Every f32 is fed to the hasher separately, as 4 little-endian bytes.
    for v in &mesh_coords.vertex_positions {
        hasher.update(&v[0].to_le_bytes());
        hasher.update(&v[1].to_le_bytes());
        hasher.update(&v[2].to_le_bytes());
    }
    for v in &mesh_coords.vertex_normals {
        hasher.update(&v[0].to_le_bytes());
        hasher.update(&v[1].to_le_bytes());
        hasher.update(&v[2].to_le_bytes());
    }
    for ix in &mesh_coords.index_data {
        hasher.update(&ix.to_le_bytes());
    }
    for ix in &mesh_coords.uv_data_array {
        hasher.update(&ix[0].to_le_bytes());
        hasher.update(&ix[1].to_le_bytes());
    }
    hasher.finalize_fixed()
}
```

```rust
use serde::{Deserialize, Serialize};

/// 3-element vector, 32-bit values.
#[derive(Copy, Clone, PartialEq, Default, Serialize, Deserialize)]
pub struct LLVector3(pub [f32; 3]);

impl LLVector3 {
    /// Field access
    pub fn get_x(&self) -> f32 {
        self.0[0]
    } // because the built-in notation is awful

    pub fn get_y(&self) -> f32 {
        self.0[1]
    }

    pub fn get_z(&self) -> f32 {
        self.0[2]
    }

    // ...

    /// Serialize to 12 bytes (three little-endian 32-bit floats)
    pub fn to_le_bytes(&self) -> [u8; 12] {
        let x = self.get_x().to_le_bytes();
        let y = self.get_y().to_le_bytes();
        let z = self.get_z().to_le_bytes();
        [
            x[0], x[1], x[2], x[3], y[0], y[1], y[2], y[3], z[0], z[1], z[2], z[3],
        ] // as 12 bytes
    }
}
```

All that's going on here is that there are `Vec`s of 2- and 3-element arrays of `f32` values, and I want a single hash covering the entire contents of those `Vec`s.
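One option, instead of calling `update` once per float, is to copy all the floats into a single byte buffer first and hand that buffer to the hasher in one call. This may cut per-call overhead, but whether it helps noticeably should be checked in the profiler. A minimal std-only sketch, assuming the fields are plain `Vec<[f32; 3]>` and `Vec<[f32; 2]>`; the helper name `append_f32_arrays` is hypothetical:

```rust
/// Hypothetical helper: appends every f32 in `data` to `buf` as little-endian bytes.
/// Const generics let one function handle both [f32; 2] (UVs) and [f32; 3] (positions, normals).
pub fn append_f32_arrays<const N: usize>(buf: &mut Vec<u8>, data: &[[f32; N]]) {
    // 4 bytes per f32; reserving up front avoids repeated reallocation while appending.
    buf.reserve(data.len() * N * 4);
    // iter().flatten() walks the arrays element by element, in order.
    for f in data.iter().flatten() {
        buf.extend_from_slice(&f.to_le_bytes());
    }
}
```

Positions, normals, and UVs could each be appended to one `Vec<u8>` (cleared and reused between meshes), followed by a single `hasher.update(&buf)`. The index data would follow the same pattern with `ix.to_le_bytes()`.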

First, converting three floats to 12 bytes takes too much fiddling. It would be nice if something like `.as_le_bytes()` worked on fixed-size arrays, but there is no such method.
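Without an array-level method, the 12-byte conversion can at least avoid spelling out every index. A sketch using only the standard library, on a trimmed copy of `LLVector3` (the serde derives are omitted here so it compiles with std alone):

```rust
/// Trimmed copy of LLVector3 from the question (serde derives omitted).
#[derive(Copy, Clone, PartialEq, Default, Debug)]
pub struct LLVector3(pub [f32; 3]);

impl LLVector3 {
    /// Serialize to 12 bytes: three little-endian f32 values, x then y then z.
    pub fn to_le_bytes(&self) -> [u8; 12] {
        let mut out = [0u8; 12];
        // chunks_exact_mut(4) yields three 4-byte windows of `out`;
        // zip pairs each window with one component of the array (copied by value).
        for (chunk, f) in out.chunks_exact_mut(4).zip(self.0) {
            chunk.copy_from_slice(&f.to_le_bytes());
        }
        out
    }
}
```

If an iterator of bytes is acceptable instead of a fixed array, `self.0.iter().flat_map(|f| f.to_le_bytes())` also works, since arrays implement `IntoIterator` by value (Rust 1.53 and later).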

Second, SHA3-256 may be too slow for this application.
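If the key only needs to be unique within a single run of the program, a non-cryptographic 64-bit hash may be enough. Below is a minimal sketch using the standard library's `DefaultHasher`. Its algorithm is unspecified and may change between Rust releases, so keys produced this way should not be persisted. `MeshCoordsSketch` and `fast_mesh_key` are hypothetical, and the field types (including `u32` indices) are assumptions. The sketch also writes each section's length first. The original code doesn't do this, so it would produce identical input for, say, a float moved from the end of the positions to the start of the normals.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::Hasher;

/// Hypothetical stand-in for MeshCoords; the field types are assumptions.
pub struct MeshCoordsSketch {
    pub vertex_positions: Vec<[f32; 3]>,
    pub vertex_normals: Vec<[f32; 3]>,
    pub index_data: Vec<u32>,
    pub uv_data_array: Vec<[f32; 2]>,
}

/// Writes the element count, then each float's raw bit pattern, into the hasher.
fn write_f32_arrays<H: Hasher, const N: usize>(h: &mut H, data: &[[f32; N]]) {
    h.write_usize(data.len());
    for f in data.iter().flatten() {
        // to_bits() hashes the exact bit pattern, like hashing to_le_bytes() did.
        h.write_u32(f.to_bits());
    }
}

/// 64-bit, non-cryptographic key for one mesh.
pub fn fast_mesh_key(m: &MeshCoordsSketch) -> u64 {
    let mut h = DefaultHasher::new();
    write_f32_arrays(&mut h, &m.vertex_positions);
    write_f32_arrays(&mut h, &m.vertex_normals);
    h.write_usize(m.index_data.len());
    for ix in &m.index_data {
        h.write_u32(*ix);
    }
    write_f32_arrays(&mut h, &m.uv_data_array);
    h.finish()
}
```

Hashing bit patterns means `0.0` and `-0.0` get different keys, just as they do with the byte-based version. With only 64 bits, a key match is strong evidence but not proof of a duplicate, so comparing the mesh data before merging two objects would be the safe choice.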

Suggestions? Safe code only, please.

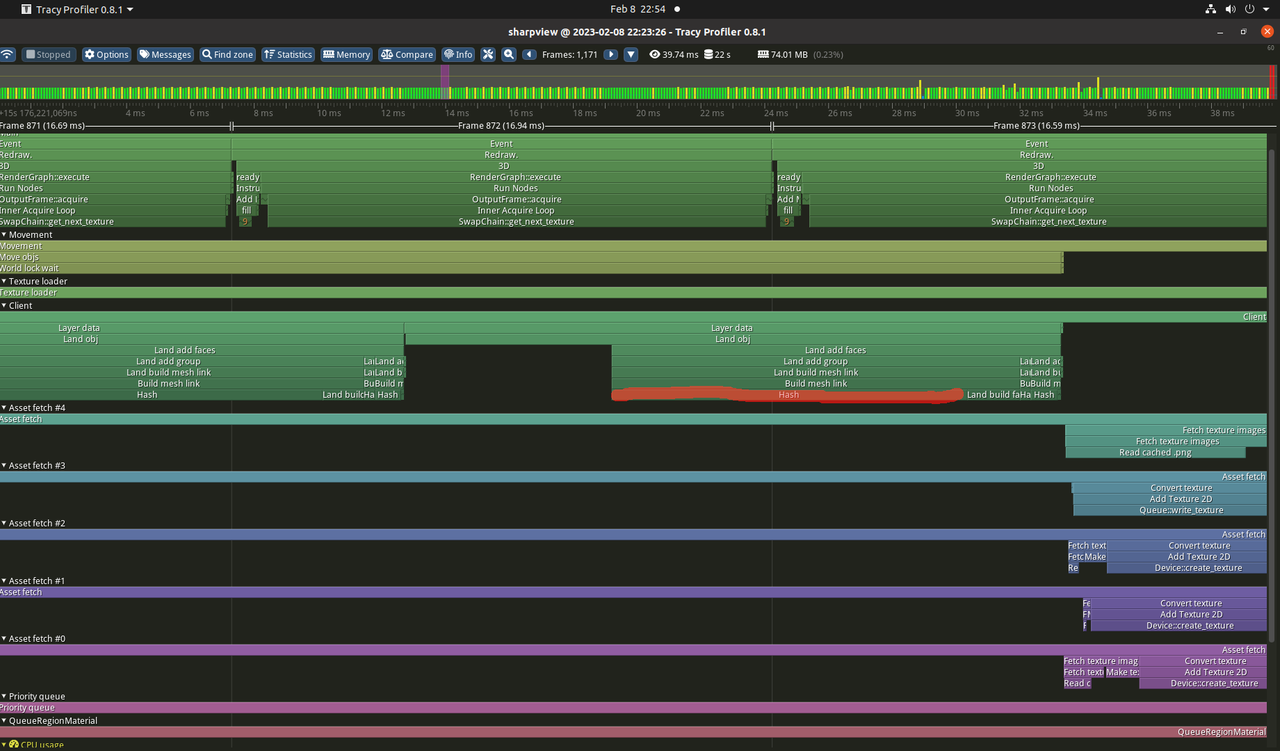

Here's a Tracy profile of the program showing how long this takes.