Does Rust do any kind of optimization for the case of 0 * f() where f() is a function returning an integer? Is Rust smart enough to just know that the result should be 0 without computing f? I'm asking this because I encountered this situation where f might be expensive to computer. I wonder whether I should do a manually if check to optimize the code.

Yes, but only if the f() doesn't do any side effect like printing something to the console. It's a bug if the optimization change the observable behavior of the program besides the performance.

Note that neither the Rust language nor the rustc compiler implementation implements it. It's the feature of the rustc's LLVM backend, the same optimizing compiler backend powers the clang C/++ compiler.

Edit: fixed the wrong example.

How about g() * f() where g() returns 0? Is the same optimization applied if f() has no side effect?

In your example even without *0 it returns 0, because (n * 4).pow(42) is either 0 or overflows i32.

Is the question about 0* explicitly in the code or in the case of a multiplication by a variable that may be zero?

Yeah I underestimated the power of the .pow(). You can fix it into small enough number like 3. Thanks for the correction!

My question is about g * f where both g and f and expression returning i64. g is possibly 0 while f might be expensive to compute. I wonder whether there are optimizations done (avoid calculating f) for the case when g is 0.

It's highly unlikely that compiler will perform such optimization. Even if compiler has properly derived that both functions are pure, it would have to estimate runtime cost of each function (e.g. situation may be reversed: f can be cheap and g expensive or both functions may be equally expensive). So you have to implement this optimization manually using your own knowledge of function properties.

Once you understand the optimizations involved, you can reason about this kind of questions in a broader sense. Bluntly asking whether the optimizer is smart enough to do what you think likely ends in finding out that the optimizer has already outsmarted you and has considered all the subtleties and edge-cases that you didn't think of.

The first optimization that is relevant is inlining. Whenever the LLVM optimizer encounters a function call, if it feels like it (depending on a number of factors), it may replace the function call with a copy of the entire function body. In Rust it's very common to write even rather basic logic as a big hierarchy of functions calling into other functions, and the entire hierarchy to be flattened through inlining, and the resulting flat function to be optimized again to remove any redundant instructions.

Another relevant optimization is multiplication by zero. If after applying inlining and other optimizations, there is a multiplication by zero, this multiplication can be removed, leaving only the zero. Even if one of the operands has side effects, the multiplication can still be removed, as the operands are not yet removed. Removing the multiplication may cause a cascade of other optimizations to be applied, as the operand of the multiplication may now be unused, and the operand itself may be calculated from something that in turn is also unused. Instructions will then be removed until an instruction that has side effects is encountered.

What you seem to be asking however, is in the case when it cannot be determined on compile time that it's a multiplication by zero, whether a runtime check of the left hand side will be added to lazily compute the right hand side only when the first operand is non-zero. From the optimizer's point of view, that's a pessimization, not an optimization. The optimizer strives to reduce the number of branches, not add extra branches where none is needed. If you know that the computation is expensive enough and the number zero will come up often enough to justify an extra branch, you have to add it explicitly. The optimizer cannot do that decision for you.

Even though the general rule is that the optimizer tries to avoid branches, the optimizer is too subtle to be defined by such a rule. There's a chance that if the right hand side happens to already contain a branch that coincides with the left hand side being zero, this case would be optimized separately, immediately evaluating to zero. Always consult Godbolt when in doubt.

Thank everyone for the great and inspiring discussions here! It helps me a lot to understand how rustc/LLVM optimization works. Goldbolt is awesome! Rust community i awesome!

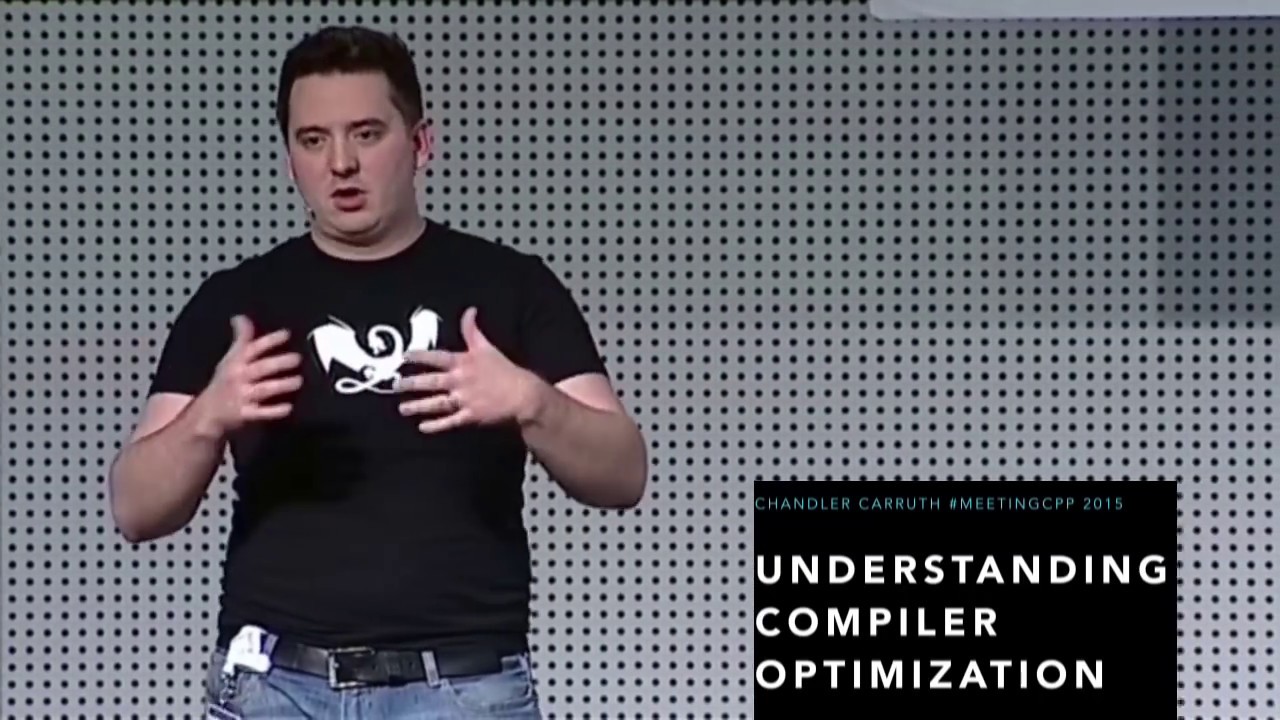

If you're curious to go deeper down the rabbit hole, I recommend this talk:

(It uses C++ as the frontend, but the important part is the LLVM stuff, which is the same for Rust.)

This topic was automatically closed 90 days after the last reply. We invite you to open a new topic if you have further questions or comments.